LEARNING-FREE OBJECT DETECTION AND LOCALIZATION

MOTIVATION

Intelligent warehousing is a promising area, and predicting object poses is one of its critical tasks.

Smart warehousing scenarios generally have the following characteristics:

Current mainstream object detection and localization approaches rely on neural networks, which can have the following problems:

[1] For each new scenario, a corresponding training data set must be built, which can be expensive, especially when 6DoF poses have to be labeled.

[2] When the scene changes (e.g., the lighting becomes stronger), the data set must be RE-established and a new neural network RE-trained.

Observation

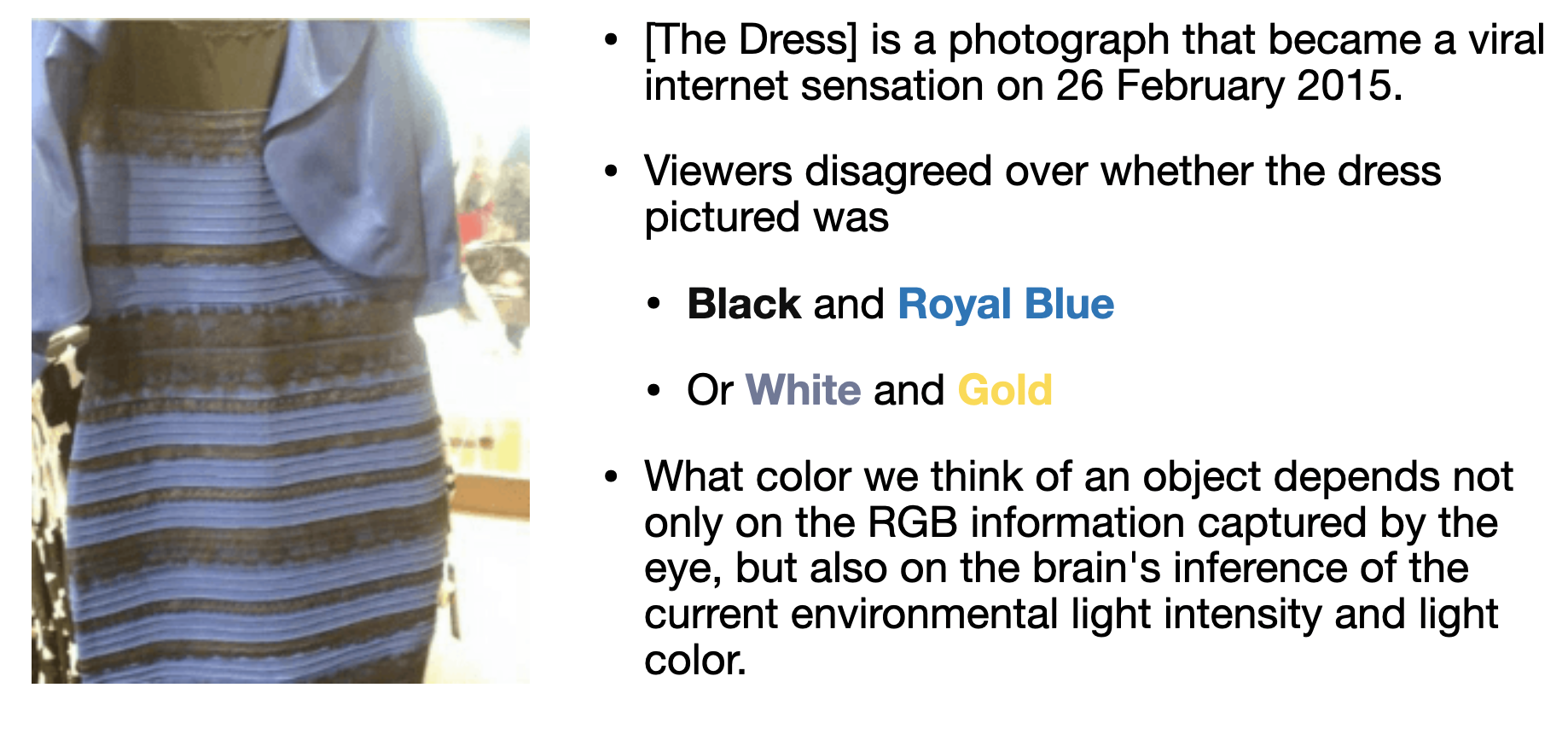

I revisited the way neural networks recognize objects. They rely mainly on two kinds of information:

[1] Color information, provided by RGB images and label data.

[2] Pattern information, i.e., the surface texture of the object of interest.

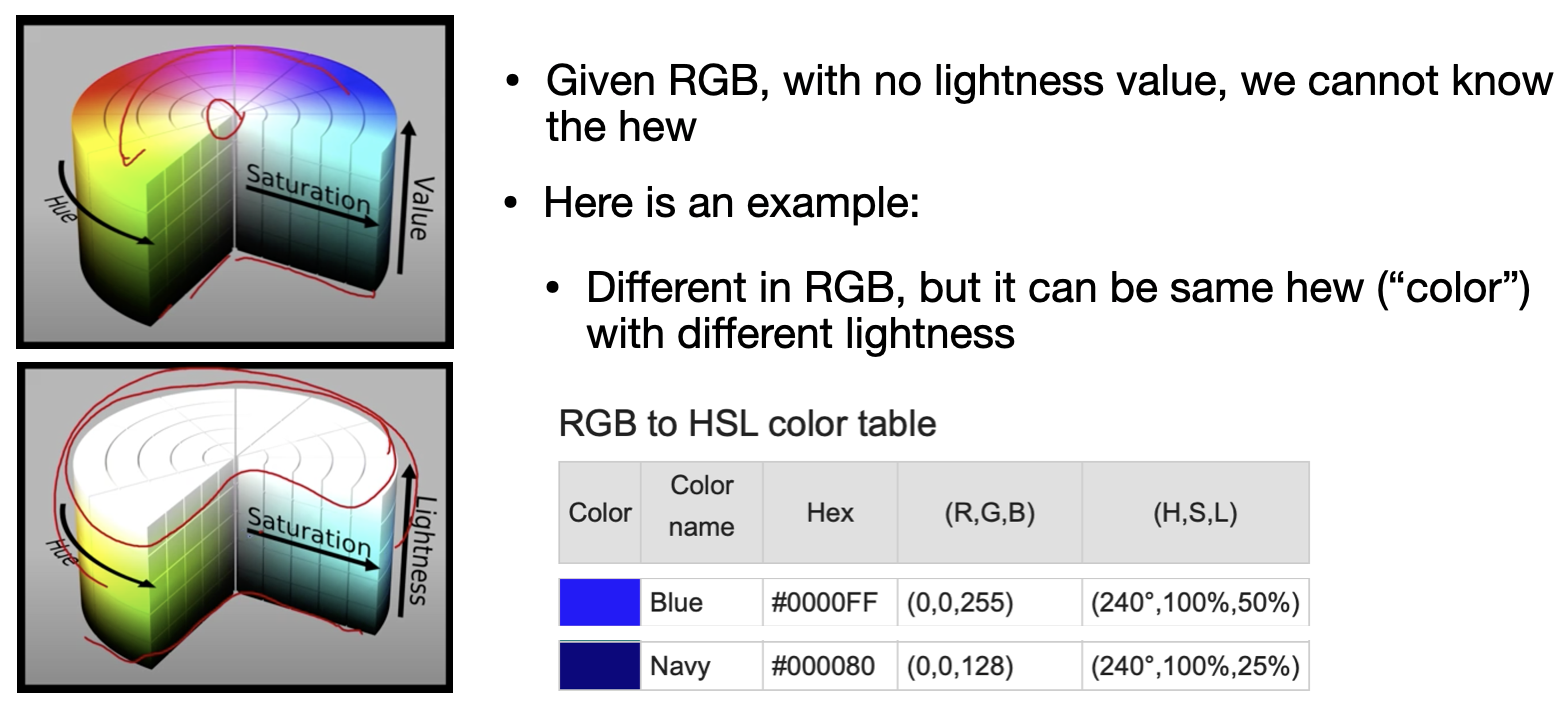

Interestingly, under different lighting conditions, surfaces with different hues may produce the same RGB values.
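
The effect can be reproduced with a toy camera model. The sketch below assumes that each channel equals surface reflectance times a light gain, clipped at sensor saturation and quantized to 8 bits. This is only one possible mechanism, since colored illumination is another, and it is not taken from the project. The reflectance values, gains and function names are hypothetical.

```python
import colorsys

def observe(reflectance, gain):
    """Toy camera model (assumption): channel = reflectance * light gain,
    clipped at sensor saturation (1.0) and quantized to 8 bits."""
    return tuple(round(min(r * gain, 1.0) * 255) for r in reflectance)

def hue_degrees(rgb):
    """Hue of an RGB triple in [0, 1], in degrees, via the stdlib HSV conversion."""
    h, _, _ = colorsys.rgb_to_hsv(*rgb)
    return h * 360.0

# Two hypothetical surface colors with clearly different hues.
surface_a = (0.9, 0.5, 0.3)   # orange-red
surface_b = (0.8, 0.8, 0.48)  # yellowish

pixel_a = observe(surface_a, gain=2.0)   # strong light: R and G channels saturate
pixel_b = observe(surface_b, gain=1.25)  # moderate light: nothing saturates

print(f"A: hue {hue_degrees(surface_a):.0f} deg, pixel {pixel_a}")
print(f"B: hue {hue_degrees(surface_b):.0f} deg, pixel {pixel_b}")
```

Both pixels come out as (255, 255, 153), although the surfaces have hues of about 20 and 60 degrees. Under a pure intensity change without saturation, this model would preserve hue. In this sketch, the ambiguity comes from the brighter light pushing channels into clipping.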

PROPOSED APPROACH

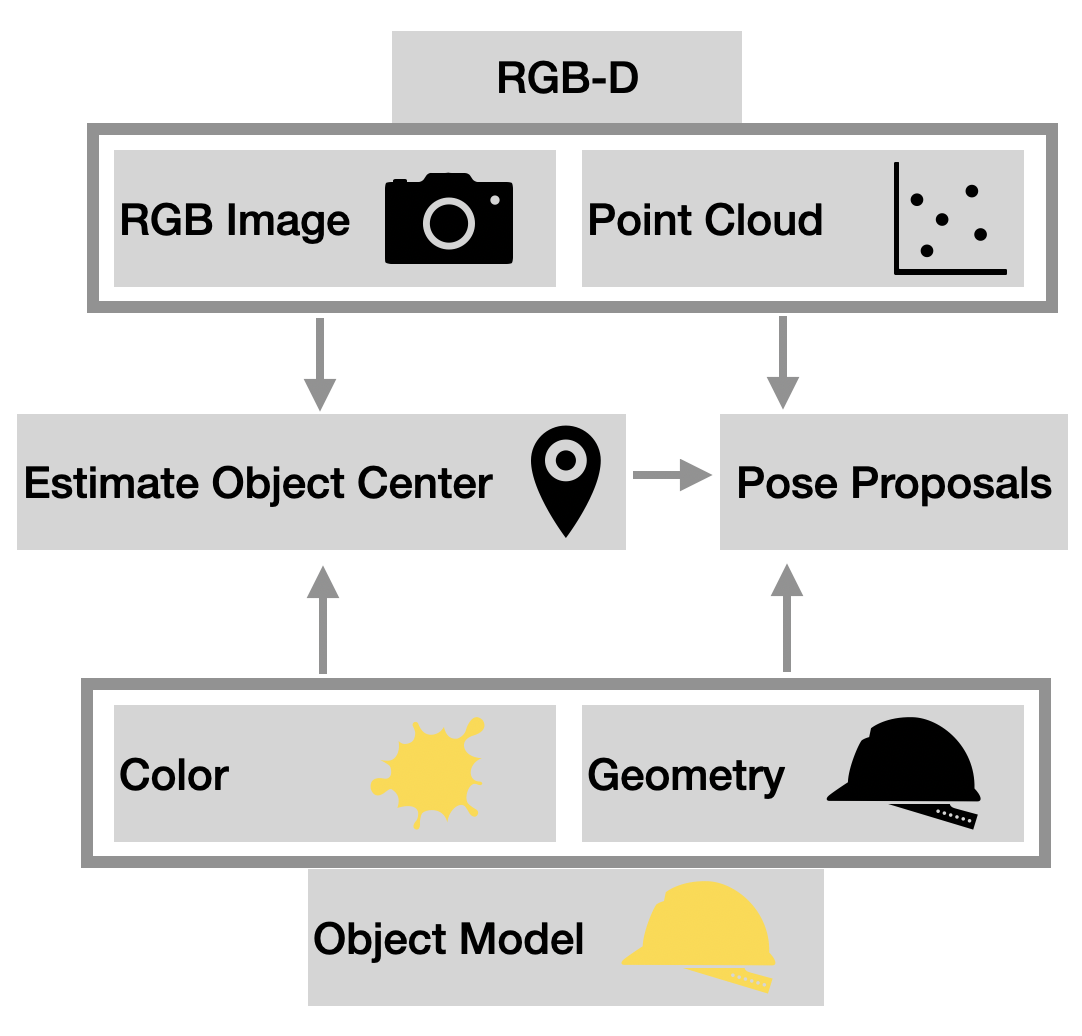

To address these problems, we propose an object detection method based on RGB-D data and 3D object models.
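
In its simplest form, a detector that uses a 3D model instead of learned features can search over candidate poses and check how well the transformed model agrees with the observed point cloud. The sketch below is a generic brute-force baseline, not the method proposed here. It makes the following assumptions:

- Poses are restricted to a rotation about the vertical axis plus a translation, a plausible but assumed simplification for objects resting on shelves.
- Each candidate is scored by the fraction of model points that land within a tolerance of some scene point.

The model points, scene clutter, search grid, 5 mm tolerance and all function names are made up for illustration.

```python
import math

def transform(points, yaw, t):
    """Rotate points about the z-axis by `yaw` radians, then translate them by t."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [(c * x - s * y + t[0], s * x + c * y + t[1], z + t[2]) for x, y, z in points]

def inlier_ratio(model_pts, scene_pts, tol):
    """Fraction of model points with a scene point within distance `tol` (brute force)."""
    tol2 = tol * tol
    hits = sum(
        1 for m in model_pts
        if any(sum((a - b) ** 2 for a, b in zip(m, p)) <= tol2 for p in scene_pts)
    )
    return hits / len(model_pts)

def search_pose(model_pts, scene_pts, yaws, translations, tol=0.005):
    """Score every (yaw, translation) candidate; return (score, yaw, translation) of the best."""
    best = (-1.0, None, None)
    for yaw in yaws:
        for t in translations:
            score = inlier_ratio(transform(model_pts, yaw, t), scene_pts, tol)
            if score > best[0]:
                best = (score, yaw, t)
    return best

# Hypothetical, deliberately asymmetric model in meters (e.g. points sampled from a CAD file).
model = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.0, 0.0), (0.0, 0.05, 0.0), (0.0, 0.0, 0.03)]

# Synthetic scene: the model placed at yaw = 30 degrees, t = (0.5, 0.2, 0.0), plus clutter.
scene = transform(model, math.radians(30), (0.5, 0.2, 0.0))
scene += [(0.9, 0.9, 0.1), (0.05, 0.4, 0.2), (0.7, 0.0, 0.15)]

yaws = [math.radians(d) for d in range(0, 360, 15)]
translations = [(x / 10, y / 10, 0.0) for x in range(10) for y in range(5)]

score, yaw, t = search_pose(model, scene, yaws, translations)
print(f"inlier ratio {score:.2f}, yaw {math.degrees(yaw):.0f} deg, t {t}")
```

A practical system would typically replace the exhaustive grid and the brute-force neighbor test with, for example, RANSAC over correspondences, a k-d tree and ICP refinement. The underlying idea of scoring model-to-scene agreement would stay the same.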

ONGOING PROJECT

Build New Object 3D Model

Collect RGB-D Data

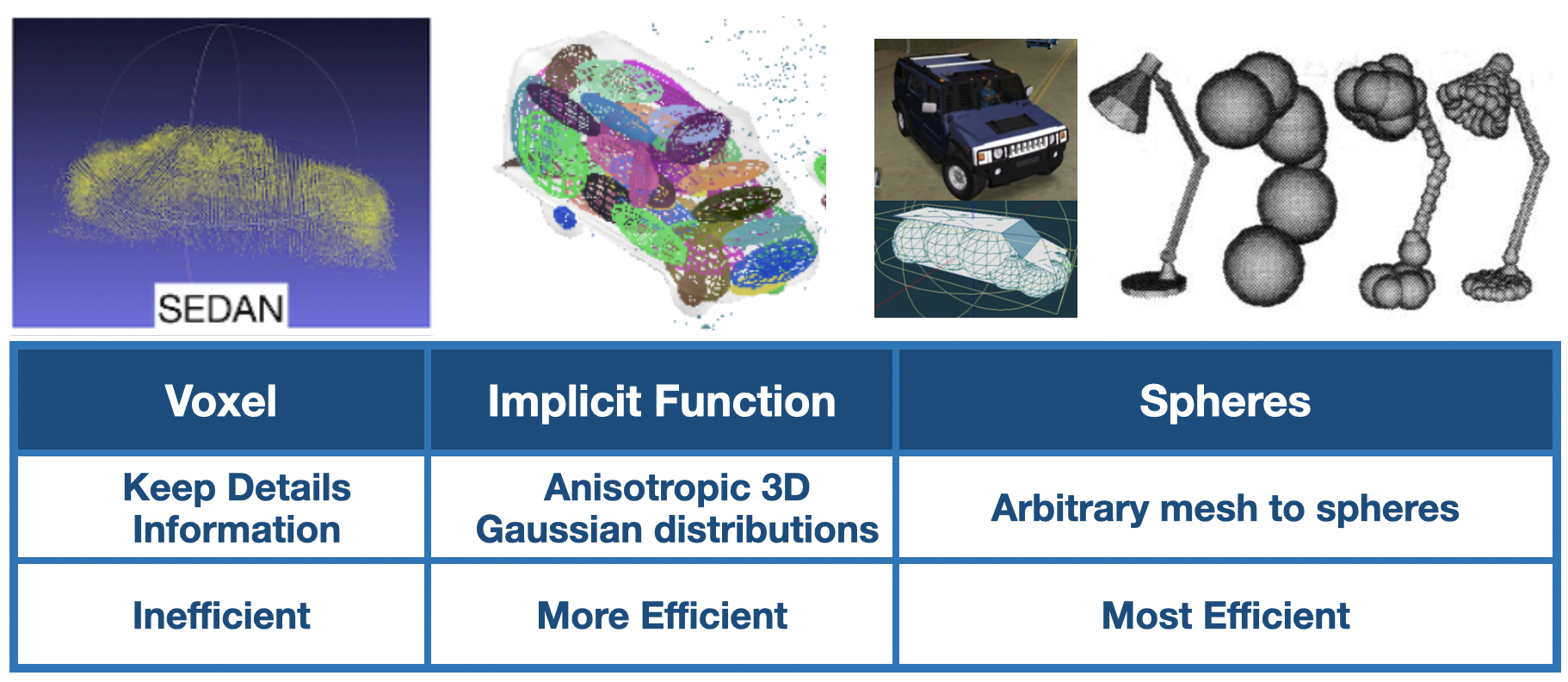
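
Building a 3D model from collected RGB-D data, or turning a live frame into a scene point cloud, starts by back-projecting each depth pixel through the camera intrinsics. The sketch below makes several assumptions:

- The camera follows the pinhole model.
- Depth is already in meters and aligned to the color image.
- A depth of 0 marks a missing reading.

The function name and the intrinsic values fx, fy, cx, cy are hypothetical.

```python
def depth_to_points(depth, rgb, fx, fy, cx, cy):
    """Back-project each valid depth pixel (u, v) to a camera-frame point, keeping its color.

    Pinhole model: x = (u - cx) * z / fx,  y = (v - cy) * z / fy.
    """
    cloud = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0.0:  # assumed convention: 0 marks a missing depth reading
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            cloud.append(((x, y, z), rgb[v][u]))
    return cloud

# Tiny hypothetical 2x3 frame (depth in meters, color registered to depth);
# the intrinsics are made-up values, not a real camera calibration.
depth = [[1.0, 1.0, 0.0],
         [2.0, 1.0, 1.0]]
rgb = [[(255, 0, 0)] * 3,
       [(0, 255, 0)] * 3]

cloud = depth_to_points(depth, rgb, fx=500.0, fy=500.0, cx=1.0, cy=0.5)
for point, color in cloud:
    print(point, color)
```

To form an object model, points from several frames would then have to be transformed into a common frame using each frame's camera pose and merged.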

[Interesting Topics] Geometry Representation

Throughout this research we have aimed for real-time performance, so representing geometric information efficiently is essential for us.
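
Voxel-grid downsampling is one common way to trade geometric detail for speed, though it is not necessarily the representation used in this project. Every point in the same cubic cell is replaced by the cell's centroid, so later matching steps touch far fewer points. The function name, voxel size and synthetic patch below are hypothetical.

```python
import math
from collections import defaultdict

def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel by their centroid."""
    buckets = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)  # integer voxel index
        buckets[key].append(p)
    # One representative point (the centroid) per occupied voxel.
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts)) for pts in buckets.values()]

# Hypothetical dense scan of a flat 100 mm x 100 mm patch sampled every 1 mm (units: mm).
dense = [(float(i), float(j), 0.0) for i in range(100) for j in range(100)]
sparse = voxel_downsample(dense, voxel_size=10.0)
print(len(dense), "->", len(sparse))
```

Larger voxels give faster but coarser geometry. Other compact representations, such as octrees or truncated signed distance fields, address the same trade-off.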
